UVTrack360: UWB-aided Tracking in 360° Videos with Open-Vocabulary Descriptions

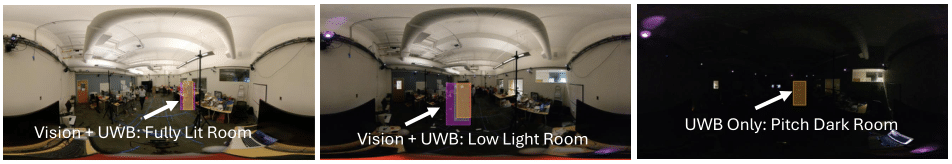

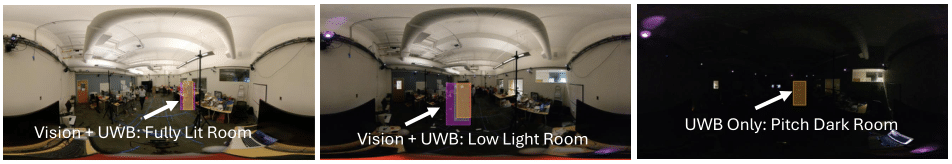

Computer-vision-based techniques are frequently used to locate a subject of interest in a video so that video feature enhancement, blurring, and directional-microphone-based audio capture can be enabled. This has applications in film-making, security, and privacy. The subject's actual location in the video feed also matters for audio post-processing, for example in Dolby Atmos, where dubbed audio is tied to the subject's movements to produce realistic spatial audio. Detecting where a subject is in a video feed is nontrivial: the lens field of view must be mapped to 3D space; the subject must be identified (potentially from an open-vocabulary description of the person), sometimes among several other subjects; and the subject must then be tracked continuously, even when occluded in some frames by other objects. In this paper, we ask: can the subject wear a wireless localization tag to simplify the task? We observe that, with the tag, the accuracy of subject localization in the video feed improves, the subject can be located even in cases where computer vision would fail (low-light conditions), and the compute time required to find the subject in the frame decreases, thereby reducing detection latency relative to tracking using video alone.